ChatGPT is not as helpful as you may have hoped... or feared

ChatGPT is an AI language model that gained mass popularity at the end of 2022, when it was made available to the general public as a kind of chatbot. Its capacity to produce fluent and coherent text is far beyond what had been available before, and its expected usefulness has been progressively hyped up as time has gone on. For now, ChatGPT isn’t a viable replacement for human expertise, but it can be a useful tool.

Below we’ll take you through what ChatGPT is, its limitations, and its uses.

What is ChatGPT?

ChatGPT is a narrow, generative artificial intelligence trained on a huge dataset of text from the internet prior to September 2021.

Narrow AI is trained using a specific type of data (e.g. text, images, video, or sound) and performs a task using that type of data. A general AI, by contrast, could theoretically be trained on a broad range of data types and perform many different tasks. Specifically, ChatGPT is designed for conversational tasks and can generate human-like responses based on the prompts given to it by users.

Generative AI produces new outputs based on the rules it has learned from its training data. This means that ChatGPT is producing new responses to each prompt, and not just reusing snippets from its training data. This makes it an adaptable text generator, able to edit and adjust its output in response to feedback from the user.
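To make the idea of generating text from learned patterns concrete, here is a toy sketch in Python. It is far simpler than ChatGPT and is not how ChatGPT is implemented; the function names `train_bigrams` and `generate` and the training sentences are invented for illustration. It only records which word tends to follow which, then strings words together one at a time.

```python
import random
from collections import defaultdict


def train_bigrams(text):
    """Record which words were seen following each word in the training text."""
    words = text.lower().split()
    followers = defaultdict(list)
    for current, nxt in zip(words, words[1:]):
        followers[current].append(nxt)
    return followers


def generate(followers, start, max_words=8, seed=0):
    """Build a sentence one word at a time by sampling a plausible next word."""
    rng = random.Random(seed)  # fixed seed so the sketch is repeatable
    output = [start]
    while len(output) < max_words:
        options = followers.get(output[-1])
        if not options:  # no known continuation, so stop
            break
        output.append(rng.choice(options))
    return " ".join(output)


# Hypothetical training text, made up for this example.
training_text = (
    "the model writes fluent text . "
    "the model predicts the next word . "
    "the next word follows the last word ."
)
followers = train_bigrams(training_text)
print(generate(followers, "the"))
```

Every step picks a word that plausibly follows the previous one, so the output can be a sentence that never appeared in the training text. Nothing in the process checks whether that sentence is true.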

With these concepts in mind, it’s important to remember that ChatGPT has been built and trained to produce human-like text. It’s very good at this. Its capacity to produce fluent, conversational writing can make it seem like the kind of all-knowing AI we’ve seen in science fiction. However, at its core, it’s just a more expansive version of the grammar and spelling pop-ups you see when writing an email.

The limitations of ChatGPT

ChatGPT isn’t a search engine, a digital encyclopedia, or an AI assistant. It’s a text generator. The fluency and confidence of its writing are the same whether it is relaying factual information or pure imagination, which makes it difficult to fact-check if you’re not already an expert. A compounding factor here is that ChatGPT also doesn’t know its limitations. Unless repeatedly pushed, it will pretend to be presenting factual information. It doesn’t know the difference, and we can’t expect it to.

When asked how it could be helpful to a scientific researcher, ChatGPT suggested the following uses, each of which we assess below:

- Information retrieval

- Idea generation

- Literature review assistance

- Experimental design optimization

- Data analysis and interpretation

- Collaboration and feedback

Information retrieval: Failed.

At present, ChatGPT has been trained on information up to September 2021, but that doesn’t mean that it has direct access to that training data. Even if the information you are looking for exists in the training data, it may not be able to retrieve and present it accurately.

Idea generation: Unlikely.

ChatGPT suggests that by talking to it about your hypotheses and limitations, it can help to generate experimental ideas. This is unlikely to be a good use of your time. As it cannot access field-specific information, any suggestions will be out of step with the norms of your field. If you find it useful to process difficult information by talking it through, and none of your colleagues are available, it might be a viable sounding board, but don’t take anything it suggests too seriously.

Literature review assistance: Failed.

ChatGPT cannot access journals, even completely open access journals from before the September 2021 cut-off. This prevents it from producing anything resembling a literature review, or assessing any articles you might be considering including in a literature review.

Experimental design optimization: Failed.

Without domain-specific information, the ability to integrate information from journals in your field, or the ability to access information after September 2021, there is no way that ChatGPT can optimize experimental design in line with current experimental findings and trends.

Data analysis and interpretation: Failed.

ChatGPT lacks domain-specific knowledge. It doesn’t know what is going on, and is more likely to produce a completely imagined interpretation than one based on whatever data you feed into it. Additionally, it can only interact with text and not with any output files produced by your experimental recording equipment.

Collaboration and feedback: Limited.

Because ChatGPT is a language model, this may be the only task it can perform that overlaps with research. If you have a draft you’re working on and need some quick feedback, ChatGPT can be useful. Even so, it’s important to keep an eye on the output to ensure that it is still accurate after being edited.

None of this invalidates the power of ChatGPT as a language model. This AI generates human-like content so convincingly that it has fooled experts into accepting fake quotes, citations, and legal precedents as fact. That is an incredible, and worrying, achievement.

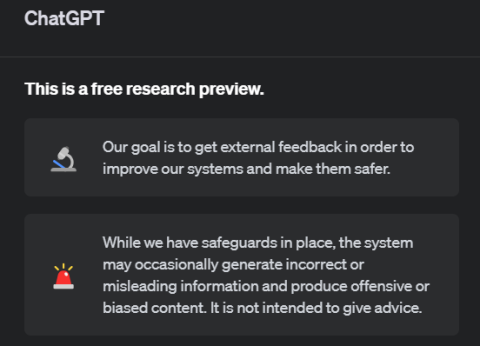

The social expectations of artificial intelligence impact how people have been using ChatGPT, but they also appear to impact how the AI presents its capabilities to users. Outside of the warning written by OpenAI about ChatGPT’s limitations as a research model, ChatGPT presents itself as having tools and understanding in line with an AI assistant from a sci-fi novel. As a society, we expected the first AI we could speak with to be as intelligent and capable as ourselves, and we have spent a long time writing about all-knowing AI. The prevalence of this concept in the data ChatGPT was trained on seems to have established it as a guideline when generating content, so ChatGPT conforms to that idea and pretends to be all-knowing and all-capable.

The core takeaway is: ChatGPT doesn’t know or care what it is saying.

In order to ensure it is presenting information as accurately as possible, you need to know that information inside and out. This makes it unusable for almost every task it suggested, but it can still be incredibly useful for more general writing tasks.

How to use ChatGPT to save some time

Ironically, the best use of ChatGPT is in producing warm, friendly writing: the fluffy bits that couch specific information and transition from one concept to another. It is perfect for rewriting emails, social media posts, and blog posts that you may have originally written as an outline or just a few bullet points. If you’re looking to produce content to promote your own research, it might save you some time.

So long as it doesn’t have the opportunity to hallucinate information, and you keep a keen eye on the output, ChatGPT can save you time on the writing tasks that pull you away from your research.


I asked ChatGPT to write an email with the following prompt:

- Short but friendly email to my boss

- New Zealand english

- Asking to attend SCANZ 2023 conference in Wellington

- Thursday 16 – Friday 17 November, 2023

With some further prodding it was about 80% of what I was looking for, and could be quickly edited to sound more like me.

I then gave it the abstract of an article and asked it to produce a short Facebook post. This was a little more hit-or-miss, because it leans toward producing clickbait. However, with some editing I would quickly have something usable. It’s not perfect, but it gives you something solid to work with, cutting out the chunk of time it would take to wrangle your ideas onto the page to begin with.

Would you get a better end result if you wrote everything yourself? Yes. But if you want something quickly and you don’t particularly care about it being the best possible version, save yourself the time and use ChatGPT.